Party Bot

ECE 5725 Spring 2023

Michael Wu (mw773) and Tony Yang (txy3)

Demonstration Video

Introduction

Music plays an essential role in everyone's life. Whether at home, at a concert, or at a party, music is almost always playing. For our final project, we therefore created a party robot that embodies the quintessential DJ and party experience. The robot plays the user's choice of music through JBL speakers connected to the Raspberry Pi and dances to the song using omnidirectional wheels.

Project Objective

- Our first objective was to play music on the Raspberry Pi by selecting a song on the piTFT

- Our second objective was to make the robot dance by moving in multiple directions

- Our last objective was to make the robot move at a different pace depending on the selected song's genre

Design

Hardware Design

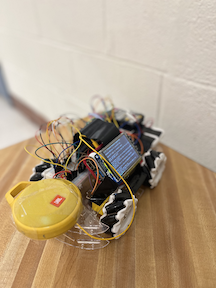

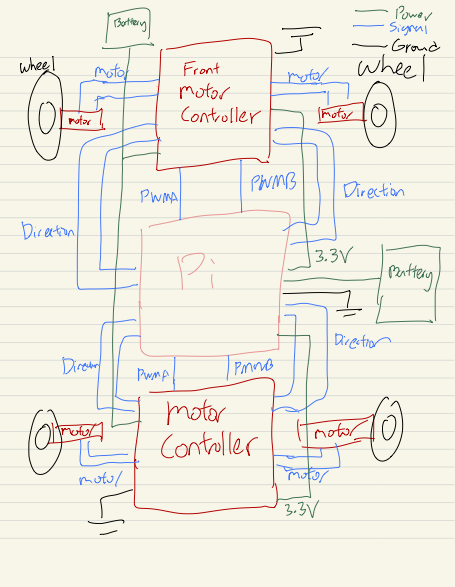

Figure 1: Diagram of our motors and breadboard circuit connected to the Raspberry Pi

For the circuitry of our robot, we used two motor controllers to drive the four wheels: one controls the front two wheels and the other controls the back two wheels. Each motor is connected to its motor controller by two output wires. For each motor, the controller takes two direction pins and one PWM pin from the Raspberry Pi. The controllers also receive a VCC signal from the Raspberry Pi, motor power from an external battery pack, and ground. Every signal line connected to the Raspberry Pi has a current-limiting resistor to avoid damaging the pins.
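The wiring above implies a simple rule per motor: exactly one of the two direction pins goes high, and the PWM duty cycle sets the speed. The sketch below captures that rule without touching hardware. It assumes an H-bridge style controller where IN1 high / IN2 low means forward (the convention `run_f1()` in the code appendix uses), and `motor_pin_levels` and `stopped_pin_levels` are hypothetical helper names.

```python
from typing import Tuple

# (IN1, IN2, duty cycle %) for one motor channel
PinLevels = Tuple[bool, bool, float]


def motor_pin_levels(speed: float, forward: bool) -> PinLevels:
    """Pin levels that drive one motor at `speed` percent duty in the given direction.

    Assumes IN1 high / IN2 low means forward and IN1 low / IN2 high means
    reverse, matching run_f1() in the code appendix.
    """
    if not 0.0 <= speed <= 100.0:
        raise ValueError("duty cycle must be between 0 and 100 percent")
    if forward:
        return True, False, speed
    return False, True, speed


def stopped_pin_levels() -> PinLevels:
    """Both direction pins low and PWM stopped (represented as 0 % duty), as in stop_f1()."""
    return False, False, 0.0


if __name__ == "__main__":
    print(motor_pin_levels(75.0, True))   # (True, False, 75.0)
    print(motor_pin_levels(35.0, False))  # (False, True, 35.0)
```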

Software Design

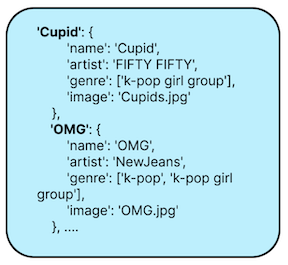

The first step of our software implementation was using the Spotify API to collect music data. Specifically, we used Spotipy, a lightweight Python library for the Spotify Web API with built-in functions for making API calls. We wanted the user to select from a predetermined set list of songs, so we made GET requests to retrieve the information associated with each track, such as the track name, artist, genre, and cover image. Spotify attaches genres to artists rather than tracks, so each song's genre list comes from its first artist. Because each API call has to complete before its response can be processed, we first made a list of all the track IDs and then looped through them to build a list of responses containing data on each of the 16 songs. Finally, we saved the songs as a dictionary whose keys are the song names and whose values hold each song's data.

Figure 2: Saved dictionary of song data
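A minimal sketch of the dictionary-building step, assuming track objects shaped like Spotify Web API responses (as returned by Spotipy's `track()` call) and an `artist_genres` lookup that would be filled from artist requests. `make_key` and `build_song_dict` are hypothetical names, and unlike the appendix code this version stores the cover URL instead of a downloaded image. The key normalization mirrors `create_music_dictionary()` in the appendix.

```python
from typing import Dict, List


def make_key(name: str) -> str:
    """Drop spaces, '&' and '.' from a track name, as create_music_dictionary() does."""
    for ch in (" ", "&", "."):
        name = name.replace(ch, "")
    return name


def build_song_dict(tracks: List[dict],
                    artist_genres: Dict[str, List[str]]) -> Dict[str, dict]:
    """Map each normalized track name to its name, first artist, genres and cover URL.

    `tracks` are dicts shaped like Spotify track objects (name, artists,
    album.images). Genres belong to artists, so `artist_genres` (a hypothetical
    lookup filled from artist requests) maps an artist name to its genre list.
    """
    songs = {}
    for track in tracks:
        artist = track["artists"][0]["name"]
        songs[make_key(track["name"])] = {
            "name": track["name"],
            "artist": artist,
            "genre": artist_genres.get(artist, []),
            "image": track["album"]["images"][0]["url"],  # cover URL, not a downloaded file
        }
    return songs


if __name__ == "__main__":
    sample = [{"name": "Oceans & Engines",
               "artists": [{"name": "NIKI"}],
               "album": {"images": [{"url": "https://example.com/cover.jpg"}]}}]
    print(build_song_dict(sample, {"NIKI": ["indonesian r&b"]}))
```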

After retrieving the song information, we displayed the songs on the piTFT. We used pygame to display the song names, five songs per page, with arrow buttons at the bottom of the screen that move to the previous or next page. We kept the song list in a fixed order rather than shuffling it each time, which avoids confusion and makes songs quicker to find. When the user taps a song name on the piTFT, a new screen shows the song's album cover, name, and artist, along with a play/pause button to control the song, volume up and down buttons, skip forward and skip backward buttons, and a return button that goes back to the song list.
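The paging and tap-to-row logic can be expressed as a few pure functions, which also makes it testable off the Pi. This is a sketch under the layout assumptions of the appendix code (five rows centered 30 px apart starting at y = 65, each accepting taps strictly within 10 px of its center); `page_items`, `arrows_visible` and `row_at` are hypothetical names.

```python
from typing import List, Optional, Tuple

PAGE_SIZE = 5  # songs per page on the piTFT home screen


def page_items(tracks: List[str], start: int) -> List[Optional[str]]:
    """Return the five titles shown for a page starting at `start`, padded with None."""
    page = tracks[start:start + PAGE_SIZE]
    return page + [None] * (PAGE_SIZE - len(page))


def arrows_visible(tracks: List[str], start: int) -> Tuple[bool, bool]:
    """(show_left, show_right): left unless on the first page, right if more songs follow."""
    return start != 0, start + PAGE_SIZE < len(tracks)


def row_at(y: int, first_center: int = 65, spacing: int = 30,
           half_height: int = 10) -> Optional[int]:
    """Map a touch y-coordinate to a row index 0-4, or None if it falls between rows."""
    for row in range(PAGE_SIZE):
        center = first_center + spacing * row
        if center - half_height < y < center + half_height:
            return row
    return None


if __name__ == "__main__":
    songs = [f"song{i}" for i in range(16)]
    print(page_items(songs, 15), arrows_visible(songs, 15), row_at(95))
```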

For the music control buttons, we used pygame's mixer module. When a song is selected, we load its .mp3 file. The song starts in a paused state, so when the user first presses the play button, the song starts playing at half volume by default; pressing the button again pauses it. The skip forward button moves to the next song in the list, and the skip backward button moves to the previous one.
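The play/pause flow (first press calls `play_song()`, later presses alternate between `pause_song()` and `unpause_song()`) can be separated from pygame by passing in the mixer object. In this sketch, `Player` is a hypothetical class that assumes only that the object it receives provides `play()`, `pause()`, `unpause()` and `set_volume()`, which `pygame.mixer.music` does. It keeps the volume as an integer step count so repeated presses cannot accumulate floating-point error.

```python
class Player:
    """Play/pause and volume logic, independent of the mixer backend."""

    def __init__(self, music, volume_step: int = 5):
        self.music = music              # e.g. pygame.mixer.music after load()
        self.playing = False            # songs start paused
        self.started = False            # has play() been called for this song?
        self.volume_step = volume_step  # 0-10, i.e. volume in steps of 0.1
        self.music.set_volume(self.volume_step / 10)

    def toggle(self) -> None:
        """First press starts the track; later presses pause and resume it."""
        self.playing = not self.playing
        if self.playing:
            if not self.started:
                self.started = True
                self.music.play()
            else:
                self.music.unpause()
        else:
            self.music.pause()

    def change_volume(self, delta: int) -> None:
        """Move the volume by `delta` steps of 0.1, clamped to [0.0, 1.0]."""
        self.volume_step = max(0, min(10, self.volume_step + delta))
        self.music.set_volume(self.volume_step / 10)
```

On the Pi, the object passed in would be `pygame.mixer.music` once a track has been loaded with `pygame.mixer.music.load(...)`.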

The last software component of our system is the robot's movement. Each of the four motors driving the omnidirectional wheels had to be connected to the correct GPIO outputs. The robot moves based on the genre of the selected song: faster-paced genres make the robot move faster than slower-paced ones. We created several movement sequences, such as moving forward and then twisting, spinning in place, or sliding sideways, and while the song plays, a random number generator repeatedly picks which sequence the robot executes next.
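The genre-to-movement logic in the appendix is a chain of `if`/`elif` thresholds on `random.uniform(0, 1)`. A table-driven sketch of the same idea uses `random.choices` with weights instead. Only three genre families are shown, the weights copy the thresholds of `rock_movement()`, `kpop_movement()` and `rb_movement()`, and `FAMILIES`, `GENRE_FAMILY` and `pick_sequence` are hypothetical names. Passing in a seeded `random.Random` makes the choices reproducible in tests.

```python
import random
from typing import Dict, Optional, Tuple

# Duty cycles (%) used as speeds in the code appendix
SPEEDS = {"full": 100.0, "fast": 75.0, "half": 50.0, "slow": 35.0}

# Genre family -> (speed name, {movement sequence: probability})
FAMILIES: Dict[str, Tuple[str, Dict[str, float]]] = {
    "rock": ("slow", {"forward_twist": 0.2, "spin_right": 0.3,
                      "spin_left": 0.2, "for_back": 0.3}),
    "kpop": ("full", {"left_right_step": 0.15, "right_left_step": 0.15,
                      "forward_twist": 0.15, "for_back": 0.15,
                      "left_for_right": 0.4}),
    "rb": ("slow", {"spin_right": 0.2, "spin_left": 0.2, "forward_twist": 0.2,
                    "for_back": 0.2, "left_right_step": 0.2}),
}

# Spotify genre string -> genre family (a subset of findPace() in the appendix)
GENRE_FAMILY = {
    "rock": "rock", "alternative rock": "rock", "dance rock": "rock", "modern rock": "rock",
    "k-pop": "kpop", "k-pop girl group": "kpop",
    "indonesian r&b": "rb", "canadian contemporary r&b": "rb",
}


def pick_sequence(genre: str, rng: random.Random) -> Optional[Tuple[str, float]]:
    """Pick the next movement sequence and its duty cycle, or None for an unmapped genre."""
    family = GENRE_FAMILY.get(genre)
    if family is None:
        return None  # findPace() likewise does nothing for unknown genres
    speed_name, weights = FAMILIES[family]
    names = list(weights)
    sequence = rng.choices(names, weights=[weights[n] for n in names], k=1)[0]
    return sequence, SPEEDS[speed_name]


if __name__ == "__main__":
    rng = random.Random(42)  # seeded so the sequence of choices is reproducible
    for _ in range(3):
        print(pick_sequence("k-pop girl group", rng))
```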

Process and Testing

The design of our project changed significantly over the weeks. In addition to having the robot play and dance to music, we initially planned to use the Pi Camera so that users could take pictures with different filters selectable from the piTFT. We tested the filters built into the camera module. Although these filters would have been a fun feature, enabling the legacy camera on the Raspberry Pi prevented us from playing audio with the pygame mixer package. Since the camera was a nice-to-have rather than a core feature, we decided to leave it out.

To test whether the piTFT displayed the correct contents, we tapped each part of the screen manually. We first tested whether the arrow buttons moved through the song menu and whether tapping a song title opened that song's info page. Once that stage worked, we checked that each song's title, artist name, and album cover displayed correctly. The most complex piTFT feature was playing, pausing, and skipping through songs: we had to ensure that a song reached by skipping started paused and required the user to press the play button to start it.
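Manual tapping can be complemented by checking the touch regions in code. This sketch copies the circular hit regions (center, radius) from the song-screen branch of `button_down()` in the appendix and checks that each button's center selects that button and that no two regions overlap. `hit`, `button_at` and `SONG_SCREEN_BUTTONS` are hypothetical names, and `math.dist` requires Python 3.8 or later.

```python
import math
from typing import Dict, Optional, Tuple

Point = Tuple[int, int]

# Hit regions copied from the song-screen handler in the appendix: (center, radius)
SONG_SCREEN_BUTTONS: Dict[str, Tuple[Point, float]] = {
    "play_pause": ((240, 60), 30),
    "next": ((290, 110), 30),
    "previous": ((190, 110), 30),
    "return": ((295, 30), 20),
    "volume_up": ((290, 190), 20),
    "volume_down": ((190, 190), 20),
}


def hit(pos: Point, center: Point, radius: float) -> bool:
    """True if a tap at `pos` lands within `radius` pixels of `center`."""
    return math.dist(pos, center) <= radius


def button_at(pos: Point) -> Optional[str]:
    """Return the first song-screen button whose region contains `pos`, if any."""
    for name, (center, radius) in SONG_SCREEN_BUTTONS.items():
        if hit(pos, center, radius):
            return name
    return None


if __name__ == "__main__":
    for tap in [(240, 60), (300, 115), (10, 230)]:
        print(tap, button_at(tap))
```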

Additionally, to check that our Spotify API calls through the Spotipy package were correct, we printed the output and verified that the retrieved song information was accurate. We often ran into API key issues and errors saying that we were making too many API calls. To work around this obstacle, we limited ourselves to a fixed list of songs and saved each song's information into a dictionary for future use, so we no longer needed to call the API whenever we needed information about a specific song.
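One way to avoid repeated API calls is a file cache that is consulted before any request. This is a sketch of one option, not the code used in the project: `load_or_fetch` and its `fetch` callback are hypothetical, and on the Pi `fetch` could wrap Spotipy's `Spotify.tracks()`, which accepts a list of up to 50 track IDs in one request instead of one `track()` call per song.

```python
import json
from pathlib import Path
from typing import Callable, Dict, List


def load_or_fetch(cache_path: Path, track_ids: List[str],
                  fetch: Callable[[List[str]], Dict[str, dict]]) -> Dict[str, dict]:
    """Return song data for `track_ids`, calling `fetch` only for IDs not already cached.

    `fetch` is a hypothetical callable taking a list of IDs and returning
    {id: song_info}. The cache is a JSON file keyed by track ID.
    """
    cache: Dict[str, dict] = {}
    if cache_path.exists():
        cache = json.loads(cache_path.read_text())
    missing = [tid for tid in track_ids if tid not in cache]
    if missing:
        cache.update(fetch(missing))
        cache_path.write_text(json.dumps(cache, indent=2))
    return {tid: cache[tid] for tid in track_ids}
```

Because cached songs are never requested again, rerunning the script only calls the API for newly added track IDs.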

Lastly, for the robot's movement, we checked whether each motor turned in the correct direction. More importantly, we verified that the motors ran at a different speed (PWM duty cycle) depending on the song's genre. For example, if the user selects a pop song, the robot moves at full speed, whereas a rock or R&B song makes it move more slowly. To test the full system, we selected a song on the piTFT, pressed the play button, and watched how the robot moved to the music.
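Part of the direction check can be done off the robot by describing each move as the direction of the four motors and expanding a dance sequence into a timeline. The direction tuples below are copied from the appendix helpers (`forward`, `backward`, `turn_left`, `turn_right`, `set_right`, `set_left`); `MOVES`, `SEQUENCES`, `dry_run` and `opposite` are hypothetical names. Whether a given pattern physically slides the robot left or right still depends on how the motors and wheels are mounted, so this complements rather than replaces watching the robot.

```python
from typing import Dict, List, Tuple

# Direction of (front 1, front 2, back 1, back 2) for each move;
# True means the motor's "forward" direction pin is driven high.
Pattern = Tuple[bool, bool, bool, bool]
MOVES: Dict[str, Pattern] = {
    "forward": (True, True, True, True),
    "backward": (False, False, False, False),
    "turn_left": (True, False, True, False),
    "turn_right": (False, True, False, True),
    "set_right": (True, False, False, True),
    "set_left": (False, True, True, False),
}

# Dance sequences as (move, seconds) steps, following the appendix functions
SEQUENCES: Dict[str, List[Tuple[str, int]]] = {
    "forward_twist": [("forward", 1), ("turn_right", 1), ("turn_left", 1)],
    "back_twist": [("backward", 1), ("turn_left", 1), ("turn_right", 1)],
    "left_right_step": [("set_left", 3), ("set_right", 3)],
}


def opposite(a: Pattern, b: Pattern) -> bool:
    """True if every motor in pattern `a` spins the other way in pattern `b`."""
    return all(x != y for x, y in zip(a, b))


def dry_run(sequence: str) -> List[Tuple[str, Pattern, int]]:
    """Expand a named sequence into (move, motor directions, seconds) without touching GPIO."""
    return [(move, MOVES[move], secs) for move, secs in SEQUENCES[sequence]]


if __name__ == "__main__":
    for step in dry_run("forward_twist"):
        print(step)
```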

Results and Conclusion

Although we were unable to include the Pi Camera in our final project, the party robot works well without it, and perhaps even better. Our robot successfully lets the user select from a variety of songs and plays the selected song while dancing to the music.

This project was a fun and valuable opportunity to learn about circuit design, schedule planning, and new APIs. Since we drove four motors, each needing three control signals, we had to work out which GPIO pins were available for them on the breadboard. This project also taught us how to decide which deliverables to complete and which to drop when time was running out. During some weeks we dealt with sickness or busy schedules and fell behind, so we learned to adjust our plans.

Overall, we created a robot that plays music and dances to it. We found that adding LEDs around the robot was impractical because we were running out of space on the robot, and the camera needed to be fixed for us. Currently, the robot's movement depends on the genre of the song that is playing; in the future, we could make the robot move to the music's rhythm by doing some sound preprocessing and analysis.

Work Distribution

Michael Wu

mw773@cornell.edu

Built the robot (omnidirectional wheels, motors, chassis) and implemented movement

Displayed song data and information on the piTFT

Tony Yang

txy3@cornell.edu

Used the Spotify API to extract and aggregate song information

Tested the overall system and focused on debugging robot movement

Parts List

- Raspberry Pi $35.00

- EMOZNY Mecanum Wheel Robot Kit $35.00

- Resistors and Wires - Provided in lab

Total: $70.00

References

PiCamera Document

Tower Pro Servo Datasheet

Bootstrap

Pigpio Library

R-Pi GPIO Document

Spotipy

Code Appendix

// pg.py

```python
import pygame
import os
import RPi.GPIO as GPIO
import random
import time

os.putenv('SDL_VIDEODRIVER', 'fbcon')  # Display on piTFT
os.putenv('SDL_FBDEV', '/dev/fb0')
os.putenv('SDL_MOUSEDRV', 'TSLIB')  # Track mouse clicks on piTFT
os.putenv('SDL_MOUSEDEV', '/dev/input/touchscreen')

# pygame initialization
pygame.init()
size = width, height = 320, 240

# Colors used in pygame, in RGB
black = 0, 0, 0
white = 255, 255, 255
dark_grey = 90, 90, 90

# Initialize GPIO pins (BCM numbering)
GPIO.setmode(GPIO.BCM)
GPIO.setup(27, GPIO.IN)  # Pin for the program-break button
GPIO.add_event_detect(27, GPIO.RISING)

screen = pygame.display.set_mode(size)
screen.fill(white)
pygame.display.flip()
```

```python
# List of track names we are going to use
tracks = ['Cupid', 'OMG', 'Ditto', 'Hotel Room Service', 'Lovesick Girls',
          'Love Story', 'Blinding Lights', 'Umbrella', 'Levitating', 'Pepas',
          'Dancing Queen', 'Mr. Brightside', 'Glimpse of Us', 'Die For You',
          'La La Lost You', 'Oceans & Engines']

# Data collected from the Music class using the Spotify API, put into JSON format for easy scaling
song_dict = {
    'Cupid': {
        'name': 'Cupid', 'artist': 'FIFTY FIFTY',
        'genre': ['k-pop girl group'], 'image': 'Cupids.jpg'},
    'OMG': {
        'name': 'OMG', 'artist': 'NewJeans',
        'genre': ['k-pop', 'k-pop girl group'], 'image': 'OMG.jpg'},
    'Ditto': {
        'name': 'Ditto', 'artist': 'NewJeans',
        'genre': ['k-pop', 'k-pop girl group'], 'image': 'Ditto.jpg'},
    'HotelRoomService': {
        'name': 'Hotel Room Service', 'artist': 'Pitbull',
        'genre': ['dance pop', 'miami hip hop', 'pop'], 'image': 'HotelRoomService.jpg'},
    'LovesickGirls': {
        'name': 'Lovesick Girls', 'artist': 'BLACKPINK',
        'genre': ['k-pop', 'k-pop girl group', 'pop'], 'image': 'LovesickGirls.jpg'},
    'LoveStory': {
        'name': 'Love Story', 'artist': 'Taylor Swift',
        'genre': ['pop'], 'image': 'LoveStory.jpg'},
    'BlindingLights': {
        'name': 'Blinding Lights', 'artist': 'The Weeknd',
        'genre': ['canadian contemporary r&b', 'canadian pop', 'pop'], 'image': 'BlindingLights.jpg'},
    'Umbrella': {
        'name': 'Umbrella', 'artist': 'Rihanna',
        'genre': ['barbadian pop', 'pop', 'urban contemporary'], 'image': 'Umbrella.jpg'},
    'Levitating': {
        'name': 'Levitating', 'artist': 'Dua Lipa',
        'genre': ['dance pop', 'pop', 'uk pop'], 'image': 'Levitating.jpg'},
    'Pepas': {
        'name': 'Pepas', 'artist': 'Farruko',
        'genre': ['reggaeton', 'trap latino', 'urbano latino'], 'image': 'Pepas.jpg'},
    'DancingQueen': {
        'name': 'Dancing Queen', 'artist': 'ABBA',
        'genre': ['europop', 'swedish pop'], 'image': 'DancingQueen.jpg'},
    'MrBrightside': {
        'name': 'Mr. Brightside', 'artist': 'The Killers',
        'genre': ['alternative rock', 'dance rock', 'modern rock', 'permanent wave', 'rock'],
        'image': 'MrBrightside.jpg'},
    'GlimpseofUs': {
        'name': 'Glimpse of Us', 'artist': 'Joji',
        'genre': ['viral pop'], 'image': 'GlimpseofUs.jpg'},
    'DieForYou': {
        'name': 'Die For You', 'artist': 'The Weeknd',
        'genre': ['canadian contemporary r&b', 'canadian pop', 'pop'], 'image': 'DieForYou.jpg'},
    'LaLaLostYou': {
        'name': 'La La Lost You', 'artist': '88rising',
        'genre': ['asian american hip hop'], 'image': 'LaLaLostYou.jpg'},
    'OceansEngines': {
        'name': 'Oceans & Engines', 'artist': 'NIKI',
        'genre': ['indonesian r&b'], 'image': 'OceansEngine.jpg'},
}

# Wrap every non-list element in a list so the result is a list of lists
def transform(nested_list):
    regular_list = []
    for ele in nested_list:
        if type(ele) is list:
            regular_list.append(ele)
        else:
            regular_list.append([ele])
    return regular_list

# Defining genre lookup: every distinct genre across all songs
genres = []
song_values = song_dict.items()
for key, value in song_values:
    genres.append(value['genre'])
flatlist = [element for sublist in genres for element in sublist]
unique_genre_list = list(set(flatlist))
```

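```python
# Handling helper for touchscreen presses and song-end events
def press_handling():
    # Module-level state; without this declaration the assignments below
    # would only create local variables
    global screen_state, started_playing, song_playing
    for event in pygame.event.get():
        if event.type == pygame.MOUSEBUTTONDOWN:
            pos = event.pos
            button_down(pos)
        elif event.type == SONG_END:
            song_playing = False  # end the dance loop once the song finishes
            stop()
            screen_state = 0
            display_screen(0)
            started_playing = False

# A customized sleep function that checks for user input every 0.1 s
def custom_sleep(t):
    loops = t * 10
    for i in range(loops):
        if not song_playing:
            break
        press_handling()
        if not song_playing:
            break
        time.sleep(0.1)
```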

```python
# Pin initialization for the motors
# F and B represent the front motor controller and back motor controller, respectively
F_PWMA = 12
F_PWMB = 4
F_AI1 = 21
F_AI2 = 23
F_BI1 = 19
F_BI2 = 17
B_PWMA = 26
B_PWMB = 22
B_AI1 = 16
B_AI2 = 20
B_BI1 = 6
B_BI2 = 5

# Initializing the pins as outputs
for pin in (F_PWMA, F_PWMB, F_AI1, F_AI2, F_BI1, F_BI2,
            B_PWMA, B_PWMB, B_AI1, B_AI2, B_BI1, B_BI2):
    GPIO.setup(pin, GPIO.OUT)

# Initializing 50 Hz PWM signals for the motors
pf1 = GPIO.PWM(F_PWMA, 50)
pf2 = GPIO.PWM(F_PWMB, 50)
pb1 = GPIO.PWM(B_PWMA, 50)
pb2 = GPIO.PWM(B_PWMB, 50)

# Motor speeds as PWM duty cycles (%)
full = 100.0
fast = 75.0
half = 50.0
slow = 35.0

# Motor directions; named DIR_* so the forward() helper below does not shadow them
DIR_FORWARD = True
DIR_BACK = False

# Stop helper for front motor 1: stop its PWM and set both direction pins low
def stop_f1():
    pf1.stop()
    GPIO.output(F_AI1, GPIO.LOW)
    GPIO.output(F_AI2, GPIO.LOW)

# Run helper for front motor 1 at the given speed (duty cycle) and direction
def run_f1(speed, direction):
    stop_f1()
    if direction:
        GPIO.output(F_AI1, GPIO.HIGH)
    else:
        GPIO.output(F_AI2, GPIO.HIGH)
    pf1.start(speed)

# Same helpers for front motor 2 and back motors 1 and 2
def stop_f2():
    pf2.stop()
    GPIO.output(F_BI1, GPIO.LOW)
    GPIO.output(F_BI2, GPIO.LOW)

def run_f2(speed, direction):
    stop_f2()
    if direction:
        GPIO.output(F_BI1, GPIO.HIGH)
    else:
        GPIO.output(F_BI2, GPIO.HIGH)
    pf2.start(speed)

def stop_b1():
    pb1.stop()
    GPIO.output(B_AI1, GPIO.LOW)
    GPIO.output(B_AI2, GPIO.LOW)

def run_b1(speed, direction):
    stop_b1()
    if direction:
        GPIO.output(B_AI1, GPIO.HIGH)
    else:
        GPIO.output(B_AI2, GPIO.HIGH)
    pb1.start(speed)

def stop_b2():
    pb2.stop()
    GPIO.output(B_BI1, GPIO.LOW)
    GPIO.output(B_BI2, GPIO.LOW)

def run_b2(speed, direction):
    stop_b2()
    if direction:
        GPIO.output(B_BI1, GPIO.HIGH)
    else:
        GPIO.output(B_BI2, GPIO.HIGH)
    pb2.start(speed)

# Helper function to stop all motors
def stop():
    stop_f1()
    stop_f2()
    stop_b1()
    stop_b2()

# Helper function to run all motors forward
def forward(speed):
    run_f1(speed, DIR_FORWARD)
    run_f2(speed, DIR_FORWARD)
    run_b1(speed, DIR_FORWARD)
    run_b2(speed, DIR_FORWARD)

# Helper function to run all motors backward
def backward(speed):
    run_f1(speed, DIR_BACK)
    run_f2(speed, DIR_BACK)
    run_b1(speed, DIR_BACK)
    run_b2(speed, DIR_BACK)

# Helper to spin the robot in place to the left
def turn_left(speed):
    run_f1(speed, DIR_FORWARD)
    run_f2(speed, DIR_BACK)
    run_b1(speed, DIR_FORWARD)
    run_b2(speed, DIR_BACK)

# Helper to spin the robot in place to the right
def turn_right(speed):
    run_f1(speed, DIR_BACK)
    run_f2(speed, DIR_FORWARD)
    run_b1(speed, DIR_BACK)
    run_b2(speed, DIR_FORWARD)

# Helper to make the robot slide sideways towards the right
def set_right(speed):
    run_f1(speed, DIR_FORWARD)
    run_f2(speed, DIR_BACK)
    run_b1(speed, DIR_BACK)
    run_b2(speed, DIR_FORWARD)

# Helper to make the robot slide sideways towards the left
def set_left(speed):
    run_f1(speed, DIR_BACK)
    run_f2(speed, DIR_FORWARD)
    run_b1(speed, DIR_FORWARD)
    run_b2(speed, DIR_BACK)
```

```python
# A movement subsequence: move forward, then twist right and left
def forward_twist(speed):
    forward(speed)
    custom_sleep(1)
    turn_right(speed)
    custom_sleep(1)
    turn_left(speed)
    custom_sleep(1)

# A movement subsequence: move backward, then twist left and right
def back_twist(speed):
    backward(speed)
    custom_sleep(1)
    turn_left(speed)
    custom_sleep(1)
    turn_right(speed)
    custom_sleep(1)

# A movement subsequence: spin right for about 3 seconds
def spin_right(speed):
    turn_right(speed)
    custom_sleep(3)

# A movement subsequence: spin left for about 3 seconds
def spin_left(speed):
    turn_left(speed)
    custom_sleep(3)

# A movement subsequence: move forward, pause, then move backward
def for_back(speed):
    forward(speed)
    custom_sleep(3)
    stop()
    custom_sleep(3)
    backward(speed)
    custom_sleep(3)

# Slide left, then slide right
def left_right_step(speed):
    set_left(speed)
    custom_sleep(3)
    set_right(speed)
    custom_sleep(3)

# Slide right, then slide left
def right_left_step(speed):
    set_right(speed)
    custom_sleep(3)
    set_left(speed)
    custom_sleep(3)

# Slide left, move forward, slide right, then move backward
def left_for_right(speed):
    set_left(speed)
    custom_sleep(3)
    forward(speed)
    custom_sleep(3)
    set_right(speed)
    custom_sleep(3)
    backward(speed)
    custom_sleep(3)  # hold the backward step as long as the others

# Variable indicating if a song is currently playing; the robot only moves while it is True
song_playing = False

# Each *_movement() function keeps picking random movement sequences until the
# song is paused, skipped, or ends, then stops the motors.

# Movement for songs in the rock genres
def rock_movement():
    while song_playing:
        chance = random.uniform(0, 1)  # Randomize which movement the robot takes next
        if chance < 0.2:
            forward_twist(slow)
        elif chance < 0.5:
            spin_right(slow)
        elif chance > 0.8:
            spin_left(slow)
        else:
            for_back(slow)
    stop()

# Movement for songs in the pop genres
def pop_movement():
    while song_playing:
        chance = random.uniform(0, 1)
        if chance < 0.2:
            left_right_step(full)
        elif chance < 0.4:
            forward_twist(full)
        elif chance < 0.6:
            spin_right(full)
        elif chance < 0.8:  # spin_left gets the 0.6-0.8 band
            spin_left(full)
        else:
            for_back(full)
    stop()

# Movement for songs in the k-pop genres
def kpop_movement():
    while song_playing:
        chance = random.uniform(0, 1)
        if chance < .15:
            left_right_step(full)
        elif chance < .3:
            right_left_step(full)
        elif chance < .45:
            forward_twist(full)
        elif chance < .6:
            for_back(full)
        else:
            left_for_right(full)
    stop()

# Movement for songs in the R&B genres
def rb_movement():
    while song_playing:
        chance = random.uniform(0, 1)
        if chance < .2:
            spin_right(slow)
        elif chance < .4:
            spin_left(slow)
        elif chance < .6:
            forward_twist(slow)
        elif chance < .8:
            for_back(slow)
        else:
            left_right_step(slow)
    stop()

# Movement for songs in the hip hop genres
def hiphop_movement():
    while song_playing:
        chance = random.uniform(0, 1)
        if chance < 0.2:
            forward_twist(full)
        elif chance < 0.5:
            spin_right(full)
        elif chance > 0.8:
            spin_left(full)
        else:
            for_back(full)
    stop()

# Movement for songs in the Latin and remaining genres
def lat_movement():
    while song_playing:
        chance = random.uniform(0, 1)
        if chance < 0.2:
            forward_twist(full)
        elif chance < 0.5:
            spin_right(full)
        elif chance > 0.8:
            spin_left(full)
        else:
            for_back(full)
    stop()

cur_gen = None  # The genre of the song currently selected

# Helper function to identify which genre family a genre belongs to and run its movement
def findPace(genre):
    if genre in ["dance rock", "modern rock", "rock", "alternative rock"]:
        rock_movement()
    elif genre in ["dance pop", "uk pop", "europop", "pop", "swedish pop",
                   "barbadian pop", "canadian pop", "viral pop"]:
        pop_movement()
    elif genre in ["kpop", "k-pop girl group", "k-pop"]:
        kpop_movement()
    elif genre in ["indonesian r&b", "canadian contemporary r&b"]:
        rb_movement()
    elif genre in ["miami hip hop", "asian american hip hop"]:
        hiphop_movement()
    elif genre in ["urban contemporary", "trap latino", "urbano latino",
                   "permanent wave", "reggaeton"]:
        lat_movement()
```

```python
# Left and right arrow images for the home screen
left_arr = pygame.image.load("left.png")
right_arr = pygame.image.load("right.png")
left_arr = pygame.transform.scale(left_arr, (60, 20))
right_arr = pygame.transform.scale(right_arr, (60, 20))
left_rect = left_arr.get_rect()
right_rect = right_arr.get_rect()
left_rect.center = (40, 210)
right_rect.center = (280, 210)

def init():
    pygame.init()

# Fill the screen with a white background
def reset_screen():
    screen.fill(white)
    pygame.display.flip()

# Display the left and right arrow buttons
def left_arrow_button():
    screen.blit(left_arr, left_rect)
    pygame.display.flip()

def right_arrow_button():
    screen.blit(right_arr, right_rect)
    pygame.display.flip()

def song_list_title():
    title_txt = pygame.font.Font(None, 24).render("Song List", True, black)
    title_rect = title_txt.get_rect(center=(160, 25))
    screen.blit(title_txt, title_rect)
    pygame.display.flip()

# Index of the first song on the current page, and the 5 songs shown on the home screen
song_index = 0
cur_songs = tracks[:5]

# Move the page forward (t=True) or backward (t=False) by 5 songs in the overall song list
def list_forward(t):
    global song_index
    if t:
        song_index += 5
    else:
        song_index -= 5
    for i in range(5):
        if i + song_index < len(tracks):
            cur_songs[i] = tracks[i + song_index]
        else:
            cur_songs[i] = None

# Touch regions [x_min, x_max, y_min, y_max]: left arrow is arrows[0], right arrow is arrows[1]
arrows = [[0, 65, 200, 240], [255, 320, 200, 240]]

# Display the song names on the piTFT; row i is centered at y = 65 + 30*i
def hard_code_songs():
    for i, song in enumerate(cur_songs):
        if song:
            song_txt = pygame.font.Font(None, 16).render(song, True, black)
            song_rect = song_txt.get_rect(center=(160, 65 + 30 * i))
            screen.blit(song_txt, song_rect)
    pygame.display.flip()

screen_state = 0  # 0 = song list screen, 1 = screen for a specific song
click_song_display = None  # display name of the song currently shown
click_song = None  # internal name of the song currently shown
cur_song_index = None  # index of the song in the overall song list

# Map the display name of each song to its internal name
name_dt = {"Cupid": "Cupid", "OMG": "OMG", "Ditto": "Ditto",
           "Hotel Room Service": "HotelRoomService", "Lovesick Girls": "LovesickGirls",
           "Love Story": "LoveStory", "Blinding Lights": "BlindingLights",
           "Umbrella": "Umbrella", "Levitating": "Levitating", "Pepas": "Pepas",
           "Dancing Queen": "DancingQueen", "Mr. Brightside": "MrBrightside",
           "Glimpse of Us": "GlimpseofUs", "Die For You": "DieForYou",
           "La La Lost You": "LaLaLostYou", "Oceans & Engines": "OceansEngines"}

def map_name(x):
    global click_song
    click_song = name_dt[x]

def song_options_control():
    quit_txt = pygame.font.Font(None, 16).render("End Program", True, black)
    quit_rect = quit_txt.get_rect(center=(260, 100))
    screen.blit(quit_txt, quit_rect)

# Load and display the album cover, song name, and artist name of the selected song
def display_song_options():
    reset_screen()
    img_direct = "/home/pi/Final/images/" + click_song + ".jpg"
    song_pic = pygame.image.load(img_direct)
    song_pic = pygame.transform.scale(song_pic, (100, 100))
    song_rect = song_pic.get_rect()
    song_rect.center = (80, 80)
    screen.blit(song_pic, song_rect)
    pygame.display.flip()
    name_txt = pygame.font.Font(None, 20).render(click_song_display, True, black)
    name_rect = name_txt.get_rect(center=(80, 160))
    screen.blit(name_txt, name_rect)
    singer_txt = pygame.font.Font(None, 20).render(song_dict[click_song]["artist"], True, black)
    singer_rect = singer_txt.get_rect(center=(80, 190))
    screen.blit(singer_txt, singer_rect)
    pygame.display.flip()

# Display the player buttons: play/pause, skip forward/backward, volume up/down, and return
def display_song_buttons():
    button_dir = "/home/pi/Final/buttons/"
    play_file = "pause.jpeg" if song_playing else "play.png"
    # (image file, side length in px, center) for each button
    buttons = [(play_file, 60, (240, 90)),
               ("forward1.png", 45, (290, 140)),
               ("back1.jpeg", 45, (190, 140)),
               ("plus.png", 30, (290, 210)),
               ("minus.png", 30, (190, 210)),
               ("return.png", 30, (295, 30))]
    for file_name, side, center in buttons:
        img = pygame.transform.scale(pygame.image.load(button_dir + file_name), (side, side))
        screen.blit(img, img.get_rect(center=center))
    volume_txt = pygame.font.Font(None, 18).render("Volume", True, black)
    screen.blit(volume_txt, volume_txt.get_rect(center=(240, 210)))
    pygame.display.flip()

# Draw the screen that corresponds to the given screen state
def display_screen(state):
    if state == 0:
        reset_screen()
        song_list_title()
        hard_code_songs()
        if song_index != 0:
            left_arrow_button()
        if song_index + 5 < len(tracks):
            right_arrow_button()
    if state == 1:
        display_song_options()
        display_song_buttons()
```

```python
started_playing = False  # whether the loaded song has been started with play() yet
SONG_END = pygame.USEREVENT  # pygame user event posted when a song finishes playing
cur_volume = 0.5  # starting volume for the song: half volume

# Load the selected song's .mp3 file into the pygame mixer
def music_selected(x):
    pygame.mixer.init()
    pygame.mixer.music.load("/home/pi/Final/mp3/" + x + ".mp3")
    pygame.mixer.music.set_endevent(SONG_END)
    pygame.mixer.music.set_volume(cur_volume)

def pause_song():
    pygame.mixer.music.pause()

def unpause_song():
    pygame.mixer.music.unpause()

def play_song():
    pygame.mixer.music.play()

# Set up the song-playing state for a song when it is shown on the piTFT
def new_song(x):
    global click_song, click_song_display, song_playing, cur_song_index, started_playing
    click_song_display = x
    click_song = name_dt[x]
    song_playing = False
    cur_song_index = tracks.index(x)
    music_selected(click_song)
    started_playing = False

# Volume moves in steps of 0.1; rounding and clamping keep it within 0.0-1.0
def increase_volume():
    global cur_volume
    cur_volume = min(1.0, round(cur_volume + 0.1, 1))
    pygame.mixer.music.set_volume(cur_volume)

def decrease_volume():
    global cur_volume
    cur_volume = max(0.0, round(cur_volume - 0.1, 1))
    pygame.mixer.music.set_volume(cur_volume)
```

```python
# Handle a touchscreen press at `pos` according to the current screen state
def button_down(pos):
    global screen_state, song_index, click_song, click_song_display, song_playing, cur_song_index, started_playing, cur_gen
    x, y = pos
    if screen_state == 0:  # presses on the song list screen
        if arrows[0][0] < x < arrows[0][1]:  # left arrow: previous page
            if arrows[0][2] < y < arrows[0][3] and song_index != 0:
                list_forward(False)
                display_screen(0)
        elif arrows[1][0] < x < arrows[1][1]:  # right arrow: next page
            if arrows[1][2] < y < arrows[1][3] and song_index + 5 < len(tracks):
                list_forward(True)
                display_screen(0)
        elif 100 < x < 220:  # song titles; row i spans 55 + 30*i < y < 75 + 30*i
            for i in range(5):
                if 55 + 30 * i < y < 75 + 30 * i:
                    if cur_songs[i] is None:
                        return
                    screen_state = 1
                    click_song_display = cur_songs[i]
                    map_name(cur_songs[i])
                    new_song(cur_songs[i])
                    display_screen(screen_state)
                    cur_gen = song_dict[click_song]["genre"][0]
                    break
    # `elif` so the tap that opened the song screen is not also read as a song-screen button
    elif screen_state == 1:
        # play/pause button
        if ((x - 240) ** 2 + (y - 60) ** 2) ** 0.5 <= 30:
            song_playing = not song_playing
            display_screen(screen_state)
            if song_playing:
                if not started_playing:
                    started_playing = True
                    play_song()
                else:
                    unpause_song()
                findPace(cur_gen)  # dances until the song is paused, skipped, or ends
            else:
                pause_song()
                stop()
        # next song button
        if ((x - 290) ** 2 + (y - 110) ** 2) ** 0.5 <= 30:
            pause_song()
            if cur_song_index != len(tracks) - 1:
                new_song(tracks[cur_song_index + 1])
                display_screen(screen_state)
                cur_gen = song_dict[click_song]["genre"][0]
        # previous song button
        if ((x - 190) ** 2 + (y - 110) ** 2) ** 0.5 <= 30:
            pause_song()
            if cur_song_index != 0:
                new_song(tracks[cur_song_index - 1])
                display_screen(screen_state)
                cur_gen = song_dict[click_song]["genre"][0]
        # return button: back to the song list
        if ((x - 295) ** 2 + (y - 30) ** 2) ** 0.5 <= 20:
            pause_song()
            song_playing = False  # also ends the dance loop
            stop()
            screen_state = 0
            display_screen(screen_state)
        # volume up and volume down buttons
        if ((x - 290) ** 2 + (y - 190) ** 2) ** 0.5 <= 20:
            increase_volume()
        if ((x - 190) ** 2 + (y - 190) ** 2) ** 0.5 <= 20:
            decrease_volume()
```

```python
# Initial display: the first page of the song list
stop()
display_screen(0)

while True:  # main loop
    if GPIO.event_detected(27):  # program break button
        break
    for event in pygame.event.get():  # constantly poll for touchscreen presses
        if event.type == pygame.MOUSEBUTTONDOWN:
            button_down(event.pos)
        elif event.type == SONG_END:
            song_playing = False
            stop()
            screen_state = 0
            display_screen(0)
            started_playing = False

# Stop the motors and release the GPIO pins and display before exiting
stop()
GPIO.cleanup()
pygame.quit()
```

// music.py

```python
import spotipy
from spotipy.oauth2 import SpotifyClientCredentials
from dotenv import load_dotenv
import requests
from PIL import Image

# Load environment variables (e.g. the Spotify client credentials) from a .env file
load_dotenv()

# Class for collecting and aggregating Spotify music data
class Music:
    scope = [
        'user-modify-playback-state',
        'user-read-playback-state',
        'user-read-currently-playing',
        'playlist-read-private',
    ]
    sp = spotipy.Spotify(auth_manager=SpotifyClientCredentials())

    # Fetch the full track object for each selected track ID
    @classmethod
    def populate_tracks(cls):
        track_ids = [
            '5mg3VB3Qh7jcR5kAAC4DSV',
            '65FftemJ1DbbZ45DUfHJXE',
            '3r8RuvgbX9s7ammBn07D3W',
            '6Rb0ptOEjBjPPQUlQtQGbL',
            '4Ws314Ylb27BVsvlZOy30C',
            '1vrd6UOGamcKNGnSHJQlSt',
            '0VjIjW4GlUZAMYd2vXMi3b',
            '49FYlytm3dAAraYgpoJZux',
            '39LLxExYz6ewLAcYrzQQyP',
            '5fwSHlTEWpluwOM0Sxnh5k',
            '0GjEhVFGZW8afUYGChu3Rr',
            '003vvx7Niy0yvhvHt4a68B',
            '6xGruZOHLs39ZbVccQTuPZ',
            '2Ch7LmS7r2Gy2kc64wv3Bz',
            '5Htq8DDDN0Xce9MwqzAzui',
            '3vZk7OAUjMtVDNC852aNqi',
        ]
        tracks = []
        for track_id in track_ids:
            tracks.append(cls.sp.track(track_id))
        return tracks

    # Return a track's name, first artist, that artist's genres, and album cover image
    @classmethod
    def get_data(cls, track):
        name = track['name']
        artist = track['artists'][0]
        artist_info = cls.sp.artist(artist['external_urls']['spotify'])
        track_artist = artist['name']
        img_link = track['album']['images'][0]['url']
        img = Image.open(requests.get(img_link, stream=True).raw)
        # Only needed once, to save the cover images to disk:
        # img.save('{}.jpg'.format(name), "JPEG", quality=80,
        #          optimize=True, progressive=True)
        genres = list(artist_info['genres'])
        return name, track_artist, genres, img

    # Aggregates individual tracks with their name, artist, genre and image and populates them in a list
    @classmethod
    def aggregate_data(cls, tracks):
        data = []
        names = []
        for track in tracks:
            name, track_artist, genres, img = cls.get_data(track)
            data.append({
                'name': name,
                'artist': track_artist,
                'genre': genres,
                'image': img,
            })
            names.append(name)
        return data, names

    # File name of a track's .mp3 (its name without spaces)
    @classmethod
    def find_song(cls, track):
        name = track['name'].replace(' ', '')
        return '{}.mp3'.format(name)

    # Creates a dictionary keyed by song name (without spaces, '&', or '.') holding each song's data
    @classmethod
    def create_music_dictionary(cls, data, names):
        search_dict = {}
        for name, song_data in zip(names, data):
            key = name.replace(' ', '').replace('&', '').replace('.', '')
            search_dict[key] = song_data
        return search_dict

music = Music()
tracks = music.populate_tracks()
data, names = music.aggregate_data(tracks)
final_dict = music.create_music_dictionary(data, names)
print(names)
```